I have just installed ZFS on my Zimacube and need some advice about how to best utilize its features. I am new to ZFS and still figuring it out. I have 6 3TB HDs in slots 1-6, which are in 1 pool with 2 RAIDZ1 VDEVs. After reading more about ZFS, I am wondering if I should use slot 7 for a ZIL or SLOG VDEV. Does anyone have advice or opinions for a noob about any of this?

I’m trying to implement ZFS on ZimaCube. I will post my steps when I succeed.

None of the special vdevs provide much value in a typical home/homelab environment compared to having a fast NVMe pool.

Even a single-drive NVMe pool works great. Just make sure you have a good backup process for any data you’d be sad to lose (which you should already be doing for all your data anyway). I just snapshot my single-drive NVMe pool to my spinner pool more frequently, since the chances of losing a single-drive pool are higher.
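For anyone wondering what snapshotting to the spinner pool looks like in practice, here is a rough sketch. The names `fast/data` and `tank/backup/data` are made up, the parent dataset on the target pool is assumed to exist already, and the script only prints the commands unless `DRY_RUN=0` is set.

```shell
#!/usr/bin/env bash
# Sketch: replicate a snapshot of a single-drive NVMe pool to an HDD pool.
# All pool and dataset names are hypothetical placeholders.

# Build the commands for a source dataset, a target dataset, and a snapshot
# label. If a previous label is given, the send is incremental (-i).
replication_cmds() {
  local src="$1" dst="$2" label="$3" prev="${4:-}"
  echo "zfs snapshot ${src}@${label}"
  if [ -n "$prev" ]; then
    echo "zfs send -i ${src}@${prev} ${src}@${label} | zfs receive -F ${dst}"
  else
    echo "zfs send ${src}@${label} | zfs receive -F ${dst}"
  fi
}

# Print by default; set DRY_RUN=0 to actually execute the commands.
replicate() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    replication_cmds "$@"
  else
    replication_cmds "$@" | sh -e
  fi
}

replicate fast/data tank/backup/data "$(date +%Y-%m-%d)"
```

After the first full send, passing the previous snapshot label as a fourth argument keeps later runs incremental, which is what makes frequent snapshots cheap.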

Statement: I contacted the ZimaOS development team and obtained internal tools. According to the info I received, if you also want to run ZFS on ZimaOS, you may need to wait for one or two minor system releases.

Sorry, I hope I’m not late.

As for ZFS itself, based on my understanding, you need to first establish a storage pool, and then establish a ZFS file system on top of the storage pool. Let’s get started.

Establish storage pool

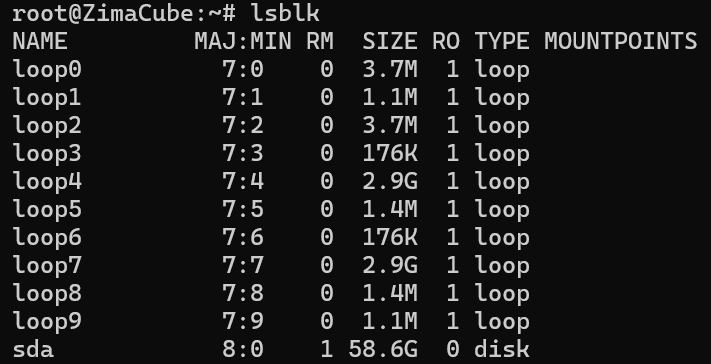

Connect an external drive to ZimaCube. Use the lsblk tool to list all the drives. You can find the drive you just connected by comparing the output before and after plugging it in.

Here, my USB drive is shown as sda.
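If the listing is long, the comparison can be scripted. This is only a sketch: `new_devices` is a helper name made up for illustration, and the device names in the example are invented.

```shell
#!/usr/bin/env bash
# Sketch: identify a newly attached drive by comparing two device listings.

# Print the names that appear in the second listing but not in the first.
new_devices() {
  comm -13 <(printf '%s\n' "$1" | sort) <(printf '%s\n' "$2" | sort)
}

# On real hardware (capture "before" with the drive unplugged):
#   before="$(lsblk -dn -o NAME)"
#   ...plug the drive in...
#   after="$(lsblk -dn -o NAME)"
#   new_devices "$before" "$after"

# Illustration with made-up listings:
new_devices $'nvme0n1\nmmcblk0' $'nvme0n1\nmmcblk0\nsda'
```

Double-check the result against the drive's size in plain lsblk output before running anything destructive on it.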

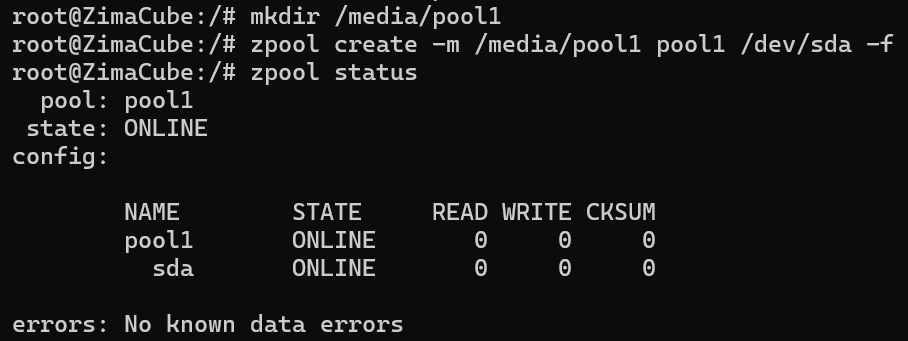

Use this command to create a storage pool.

# You may first need to wipe the start of the disk (partition table and old signatures). This destroys what is on it:

dd if=/dev/zero of=/dev/sda bs=1M count=10

# Since the root directory is read-only under most circumstances on ZimaOS,

# manually make a directory under /media for mounting.

mkdir /media/pool1

# Create your pool:

zpool create -f -m /media/pool1 pool1 /dev/sda

# Also, you may need one of these later: export detaches the pool and keeps its data, destroy deletes the pool for good.

zpool export pool1

zpool destroy pool1

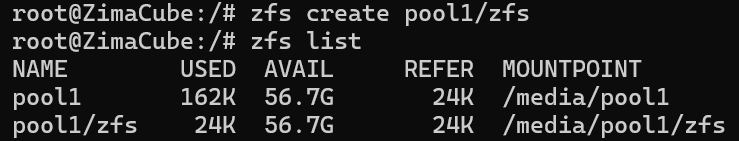

Establish ZFS on storage pool

# Create a ZFS dataset (file system) on the storage pool:

zfs create pool1/zfs

# List the datasets and their mount points:

zfs list

I chose to mount the storage pool and its dataset under /media so that you can easily use ZFS on ZimaOS.

How did you install ZFS on ZimaOS? It doesn’t seem to have an apt command, or am I missing some assumption here…

Just received the news from IW that the ZFS packages will be included in the beta version of the ZimaOS to be released next Friday. It will be available soon!

That’s great news, but it still doesn’t explain how you installed it given there is no package manager. What was your trick to do that? Will they have a ZFS UI too?

See the statement part. Or, wait till next week.

Oh, you got private tools and proceeded to tell the rest of us how to do something none of the rest of us could possibly do. Got it. Do you like snatching candy from babies too?

If you are new to ZFS like me, the thing everyone who knows much more about ZFS has said to me is: don’t do anything fancy at home. You’re more likely to make things worse, waste money, or just add ways for your data to be lost or partially corrupted.

Few things in home use will get any benefit out of the special stuff, with the exception of putting the metadata on SSD. But you need to protect that metadata vdev with the same redundancy as your other data, because if you lose it then all your data is gone.
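To make the redundancy point concrete, here is a small sketch of adding the metadata (special) vdev as a mirror rather than a single SSD. The pool name `tank` and the device paths are placeholders, and the helper `special_vdev_cmd` is made up for illustration; it only prints the command so it can be reviewed first.

```shell
#!/usr/bin/env bash
# Sketch: build the command that adds a *mirrored* special (metadata) vdev.
# Pool and device names are hypothetical.

# Arguments: pool name, then two or more SSD devices to mirror.
special_vdev_cmd() {
  local pool="$1"; shift
  if [ "$#" -lt 2 ]; then
    echo "refusing: a special vdev needs at least two devices to mirror" >&2
    return 1
  fi
  echo "zpool add ${pool} special mirror $*"
}

# Prints the command only; review it, then run it by hand as root.
special_vdev_cmd tank /dev/disk/by-id/ssd-A /dev/disk/by-id/ssd-B
```

The guard against a single device is the whole point of the sketch: an unmirrored special vdev is a single point of failure for the pool.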

Maybe start from a different direction: what is currently not working well for you because of slowness? Then people who are familiar with ZFS can suggest options.

Being totally new and playing with it on TrueNAS on the ZimaCube, you are right. I added a metadata (special) vdev only to find out that once you have done that it can’t be removed (unlike the L2ARC cache). It also seems the other caches only matter if you are doing block-device work instead of file shares.

That said, it seems pretty simple to set up a single RAIDZ1 vdev, which can be expanded by replacing each drive with a larger one or, with the new RAIDZ expansion feature, by adding drives (the only downside being manual rebalancing of the data using a script).
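Roughly, the two expansion routes look like this. It is a sketch with made-up pool, vdev, and disk names; the functions only print commands, and as far as I know the attach route needs OpenZFS 2.3 or newer.

```shell
#!/usr/bin/env bash
# Sketch: print the commands for the two ways of growing a RAIDZ vdev.
# Pool, vdev, and disk names below are hypothetical.

# Route 1: swap each disk for a bigger one, one at a time.
# Arguments: pool, then old/new disk pairs.
grow_by_replacement_cmds() {
  local pool="$1"; shift
  echo "zpool set autoexpand=on ${pool}"
  while [ "$#" -ge 2 ]; do
    echo "zpool replace ${pool} $1 $2"
    # Wait for the resilver to finish before touching the next disk.
    echo "zpool wait -t resilver ${pool}"
    shift 2
  done
}

# Route 2: RAIDZ expansion, attaching one new disk to the existing vdev.
# Arguments: pool, raidz vdev name as shown by `zpool status`, new disk.
raidz_expand_cmd() {
  echo "zpool attach $1 $2 $3"
}

grow_by_replacement_cmds tank sda sdg sdb sdh
raidz_expand_cmd tank raidz1-0 sdi
```

With route 1 the extra space only shows up after the last disk in the vdev has been replaced.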

So for me it comes down to what will be more reliable, what will scrub the fastest, and what will rebuild the fastest when comparing ZFS RAIDZ1 with, say, Btrfs RAID 5.

OK, I created a 5-wide RAIDZ2 vdev with a mirrored special vdev.

I created a dataset and mounted it at /DATA/backups.

Life was good until I rebooted. After that there is nothing in /DATA/backups other than the top-level folders that were copied over, and they are entirely empty.

This is the output of zfs list and zfs mount. I don’t understand the /var/lib mount point. I think the read-only filesystem of ZimaOS messed with my ZFS somehow on shutdown/reboot. There is 765G of data but seemingly no way to access it… any ideas?

bash-5.2# zfs list

NAME USED AVAIL REFER MOUNTPOINT

firecracker 765G 63.7T 170K none

firecracker/backups 765G 63.7T 765G /DATA/backups

bash-5.2# zfs mount

firecracker/backups /DATA/backups

firecracker/backups /var/lib/casaos_data/backups

On further investigation:

When I run du /DATA/backups I see all the files, but when I run ls /DATA/backups I see nothing. This seems like a bad bug…
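Not a fix, but one way to narrow this down is to check whether a dataset shows up at more than one mount point, as the zfs mount output above suggests. The helper name `multi_mounted` is made up, and the sketch assumes mount points contain no spaces.

```shell
#!/usr/bin/env bash
# Sketch: flag datasets that appear at more than one mount point in
# `zfs mount` output (two columns: dataset, mount point).

multi_mounted() {
  awk '{ count[$1]++; where[$1] = where[$1] " " $2 }
       END { for (d in count) if (count[d] > 1) print d ":" where[d] }'
}

# On the affected box:  zfs mount | multi_mounted
# Illustration using the two lines from the output posted above:
printf '%s\n' \
  'firecracker/backups /DATA/backups' \
  'firecracker/backups /var/lib/casaos_data/backups' | multi_mounted
```

If a dataset is listed, comparing `zfs get mountpoint,mounted firecracker/backups` with `findmnt /DATA/backups` should show which layer is actually serving that path.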