I’m looking to create a JBOD setup because I don’t want to use RAID 0. My understanding is that JBOD combines all my disks into one pool and stores data on one disk until it gets full, then moves to the next disk. In contrast, RAID 0 stripes data across multiple disks, meaning a single disk failure would cause the entire array to fail.

To be clear, I’m not looking for redundancy for my data. I want maximum storage efficiency while still avoiding a total loss if one disk fails.

I’m interested in that, too.

AFAIR, ZimaOS offered mergerfs, but it was removed afterwards.

mergerfs is still there. I was able to create a mount point, but now I don’t know how to enable SMB for it on ZimaOS.

Found a way.

```bash
# Create a mount point directory under /DATA/
mkdir -p /DATA/PoolDrive/SlowDrive

# Pool the two disks with mergerfs (branches are colon-separated)
mergerfs -o defaults,allow_other,use_ino,category.create=mfs /DATA/.media/HDD-Storage:/DATA/.media/HDD-Storage1 /DATA/PoolDrive/SlowDrive
```
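
A note on `category.create=mfs` in the command above: `mfs` ("most free space") tells mergerfs to create each new file on whichever branch currently has the most free space, so new files are spread across the disks rather than filling one disk before moving to the next, as the JBOD description at the top of the thread assumes. mergerfs offers other create policies as well (for example `ff`, first found, which follows the listed branch order); check the mergerfs documentation for their exact semantics. The function below is only a simplified, hypothetical illustration of the `mfs` decision using `df`; `pick_mfs_branch` is a made-up name and this is not how mergerfs is implemented.

```bash
#!/usr/bin/env bash
# Hypothetical, simplified illustration of the mergerfs "mfs" (most free space)
# create policy: print whichever candidate branch currently has the most free space.
# This is NOT mergerfs code; mergerfs makes this decision internally when creating files.
pick_mfs_branch() {
  local best="" best_free=-1 branch free
  for branch in "$@"; do
    # Skip branches that don't exist (e.g. a disk that isn't mounted yet)
    [ -d "$branch" ] || continue
    # With -P, df prints one data line per path; field 4 is available 1K blocks
    free=$(df -Pk -- "$branch" | awk 'NR == 2 { print $4 }')
    free=${free:-0}
    if [ "$free" -gt "$best_free" ]; then
      best_free=$free
      best=$branch
    fi
  done
  # Print nothing and return non-zero if no branch was usable
  [ -n "$best" ] && printf '%s\n' "$best"
}

# Example with the two disks from the command above:
#   pick_mfs_branch /DATA/.media/HDD-Storage /DATA/.media/HDD-Storage1
```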

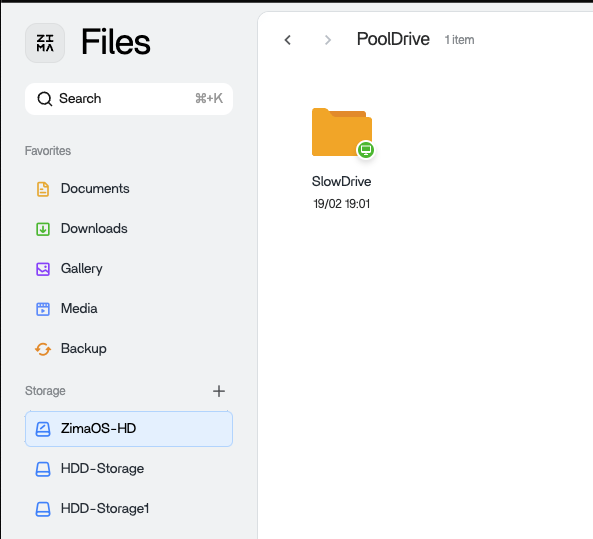

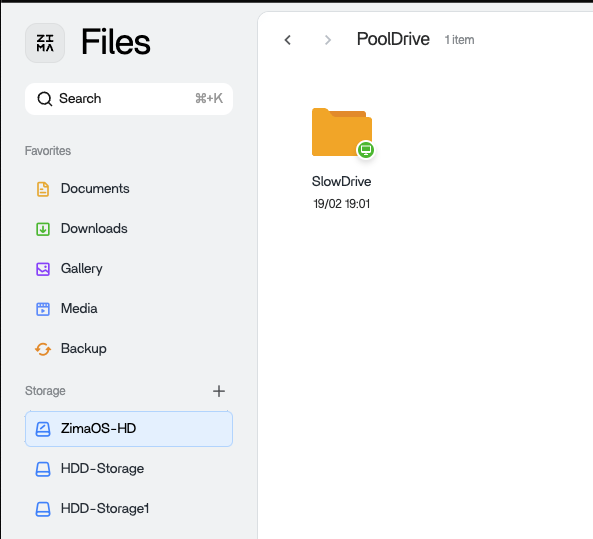

Then I simply created a new SMB share in the File UI, and SlowDrive now shows up on ZimaOS-HD.

To make it persistent and start automatically when the machine reboots, I created a service. I tried fstab too, but I couldn’t make it work, so I resorted to running it as a service.

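```bash
nano /etc/systemd/system/mergerfs-slowdrive.service
```

```ini
[Unit]
Description=mergerfs for pool drive
After=network.target

[Service]
Type=oneshot
# Short delay so the disks under /DATA/.media are mounted first
ExecStartPre=/bin/sleep 10
# mergerfs expands the quoted glob itself (HDD-Storage, HDD-Storage1, ...)
ExecStart=/usr/bin/mergerfs -o defaults,allow_other,use_ino,category.create=mfs "/DATA/.media/HDD-Storage*" /DATA/PoolDrive/SlowDrive
ExecStop=/bin/fusermount -uz /DATA/PoolDrive/SlowDrive
# mergerfs runs in the background, so keep the unit "active" after ExecStart returns
RemainAfterExit=true

[Install]
WantedBy=multi-user.target
```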
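
After saving the unit, it still has to be loaded and enabled, typically with `systemctl daemon-reload` followed by `systemctl enable --now mergerfs-slowdrive.service`. To confirm the pool actually came up after a reboot, one option is to look for a `fuse.mergerfs` entry at the mount point in the kernel mount table. The helper below is a hypothetical sketch of that check; `is_mergerfs_mount` is a made-up name, and the optional second argument exists only so it can be tried against a sample file.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if TARGET is mounted as a mergerfs (FUSE) filesystem.
# Usage: is_mergerfs_mount TARGET [MOUNT_TABLE]
# MOUNT_TABLE defaults to /proc/self/mounts, whose fields are:
#   source target fstype options dump pass
# (paths containing spaces appear escaped as \040 and are not handled here)
is_mergerfs_mount() {
  local target=$1 table=${2:-/proc/self/mounts}
  awk -v t="$target" '$2 == t && $3 == "fuse.mergerfs" { found = 1 } END { exit !found }' "$table"
}

# One-time setup after creating the unit file (as root):
#   systemctl daemon-reload
#   systemctl enable --now mergerfs-slowdrive.service
# Then, e.g. after a reboot:
#   is_mergerfs_mount /DATA/PoolDrive/SlowDrive && echo "pool is mounted"
```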

Rather than creating a new service, my solution was to add a JBOD series of 4 disks, each named uniquely, since I had 20TB of HDDs. I plugged them into consecutive SATA slots by size and added each one in turn, for instance 8TB-Seagate, 5TB-Seagate, 4TB-Toshiba, etc. They all then became Samba shares, which I could access after adding the NAS’s server name (ZimaOS.local) and user credentials to Windows Credentials. You can then fill them in whatever order you choose and move files and folders around to make use of or free up space, or just rotate drives in and out of storage when you power-cycle the whole device.

I like having my drive pool automated, and I was able to achieve that with MergerFS + SnapRAID. I really like this setup, and it might be a better option for me than the Unraid setup I had before.

MergerFS provides me with a single pooled drive that I can use to create one SMB share or multiple SMB shares (subfolders). For redundancy, I use SnapRAID, which is very efficient since a single parity disk protects the entire drive pool against a single disk failure. The SnapRAID parity disk doesn’t need to be as large as the whole pool; it only needs to be at least as large as the largest disk in the pool.
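
The sizing rule above is simple arithmetic: with, say, 8 TB, 5 TB and 4 TB data disks, a single 8 TB parity disk is enough even though the pool holds 17 TB. The sketch below encodes that rule; `parity_fits` is a hypothetical helper, and sizes are plain byte counts (on Linux, `blockdev --getsize64 /dev/sdX` reports a device’s size in bytes, with the device name being a placeholder here).

```bash
#!/usr/bin/env bash
# Hypothetical helper encoding SnapRAID's single-parity sizing rule:
# the parity disk must be at least as large as the largest data disk.
# Usage: parity_fits PARITY_BYTES DATA_BYTES...
parity_fits() {
  local parity=$1 disk
  shift
  for disk in "$@"; do
    # A single data disk larger than the parity disk fails the check
    if (( disk > parity )); then
      return 1
    fi
  done
  return 0
}

TB=1000000000000  # decimal terabyte, as drive vendors label capacity
# 8 TB parity protecting 8 TB + 5 TB + 4 TB data disks (17 TB pool)
parity_fits $((8 * TB)) $((8 * TB)) $((5 * TB)) $((4 * TB)) && echo "8 TB parity is enough"
```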

My SnapRAID setup runs every night and syncs the parity only if the number of changes is within an acceptable threshold. If not, it cancels the sync so I can review things manually. This is extra safety in case a large number of files gets deleted accidentally. It also sends me an email report of the sync.
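
The "check before sync" idea can be sketched around `snapraid diff`, whose summary reports how many files were added, removed, updated and so on. The function below is a hypothetical sketch, not the setup described above: it reads that summary on stdin and succeeds only if the removed-file count is at or below a threshold you choose. The summary format it parses (a count followed by the word `removed`), the function name, the temporary file path and the threshold of 50 are all assumptions; compare them against your own `snapraid diff` output.

```bash
#!/usr/bin/env bash
# Hypothetical gate for a nightly SnapRAID job: read `snapraid diff` output on stdin
# and succeed only if the number of removed files is <= THRESHOLD.
# Assumes the summary contains a line like "      3 removed".
removed_within_threshold() {
  local threshold=$1 removed
  removed=$(awk '$2 == "removed" { print $1; exit }')
  # No "removed" line at all is treated as zero deletions
  removed=${removed:-0}
  (( removed <= threshold ))
}

# Sketch of the nightly flow (threshold of 50 is an arbitrary example):
#   snapraid diff > /tmp/snapraid-diff.txt
#   if removed_within_threshold 50 < /tmp/snapraid-diff.txt; then
#     snapraid sync
#   else
#     echo "Too many deletions; skipping sync for manual review" >&2
#   fi
```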

A very skillful setup and resourceful use of SnapRAID. I guess I’m just wary of layering in something else in case a component or a not-so-new HDD in my 9-year-old system fails. You’ve prompted me to fully research MergerFS to see if I can manage it properly. I only recently discovered the simplicity of ZimaOS and how well it fits into reviving a bunch of old hardware I assumed was obsolete, turning it into a NAS and Docker-capable machine.

In your case, MergerFS is actually a really good fit. The beauty of it is that it doesn’t care which file system each of your hard drives is formatted with, so you can mix different drives in your pool without any issues.

This guide helped me a lot with setting things up: "Setting up SnapRAID on Ubuntu to Create a Flexible Home Media Fileserver" by Zack Reed.

I followed its instructions for mounting the parity drive because I didn’t want to use ZimaOS’s method. Mounting it the ZimaOS way would add its capacity to the total disk space on the dashboard and automatically share it over SMB, which I wanted to avoid.

The rest of my drives that are part of the pool are mounted using the ZimaOS method.

There are a few challenges with ZimaOS since it’s very minimal:

- No package manager out of the box, so you need to install `opkg`. I needed opkg to update MergerFS (less work than compiling) and to install git, an email client (mutt) and other useful tools. However, even with opkg installed, the available packages are very limited. For example, SnapRAID isn’t available, so I had to compile and install it manually.
- No `cron`, so I had to use a systemd timer instead.
- I had to tweak some default `.ssh` files to enable password-less SSH access to the server. This also let me use Visual Studio Code remotely (which is actually handy for editing config files). I also disabled the annoying bash_history message and made other adjustments.
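
Since ZimaOS has no cron, a nightly job such as the SnapRAID sync can be driven by a systemd timer. The sketch below writes a oneshot service plus a matching `.timer` unit; the unit name `snapraid-nightly`, the 03:00 schedule and the `/usr/local/bin/snapraid-nightly.sh` script path are hypothetical placeholders, and `UNIT_DIR` is overridable only so the generated files can be inspected somewhere other than `/etc/systemd/system`.

```bash
#!/usr/bin/env bash
# Hypothetical sketch: write a systemd service + timer pair that replaces a cron entry.
# All names and the schedule are placeholders; run as root for the real UNIT_DIR.
write_nightly_timer() {
  local unit_dir=${UNIT_DIR:-/etc/systemd/system}
  local name=snapraid-nightly   # hypothetical unit name

  # The service does the actual work once per activation
  cat > "$unit_dir/$name.service" <<'EOF'
[Unit]
Description=Nightly SnapRAID check and sync

[Service]
Type=oneshot
ExecStart=/usr/local/bin/snapraid-nightly.sh
EOF

  # By default a timer activates the service with the same base name
  cat > "$unit_dir/$name.timer" <<'EOF'
[Unit]
Description=Run the SnapRAID nightly job at 03:00

[Timer]
OnCalendar=*-*-* 03:00:00
# Run a missed job at the next opportunity if the machine was off at 03:00
Persistent=true

[Install]
WantedBy=timers.target
EOF
}

# Usage (as root):
#   write_nightly_timer
#   systemctl daemon-reload
#   systemctl enable --now snapraid-nightly.timer
#   systemctl list-timers   # shows the next scheduled run
```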

With all these advanced changes, I was actually considering switching to Debian Linux to make life easier, but I still decided to go with ZimaOS.


I really like the Thunderbolt connection to my Mac mini M1, which gives me very fast access to my storage. That was the main reason I got the ZimaCube Pro.